MCP Guide: Understanding The Protocol Powering the AI Agent Ecosystem

API protocols are everywhere. REST, GraphQL, SOAP, gRPC – we've seen countless patterns emerge over the years. But none of these were designed specifically for the AI era we're now living in. That's where MCP (Model Context Protocol) comes in.

Why you should care about MCP

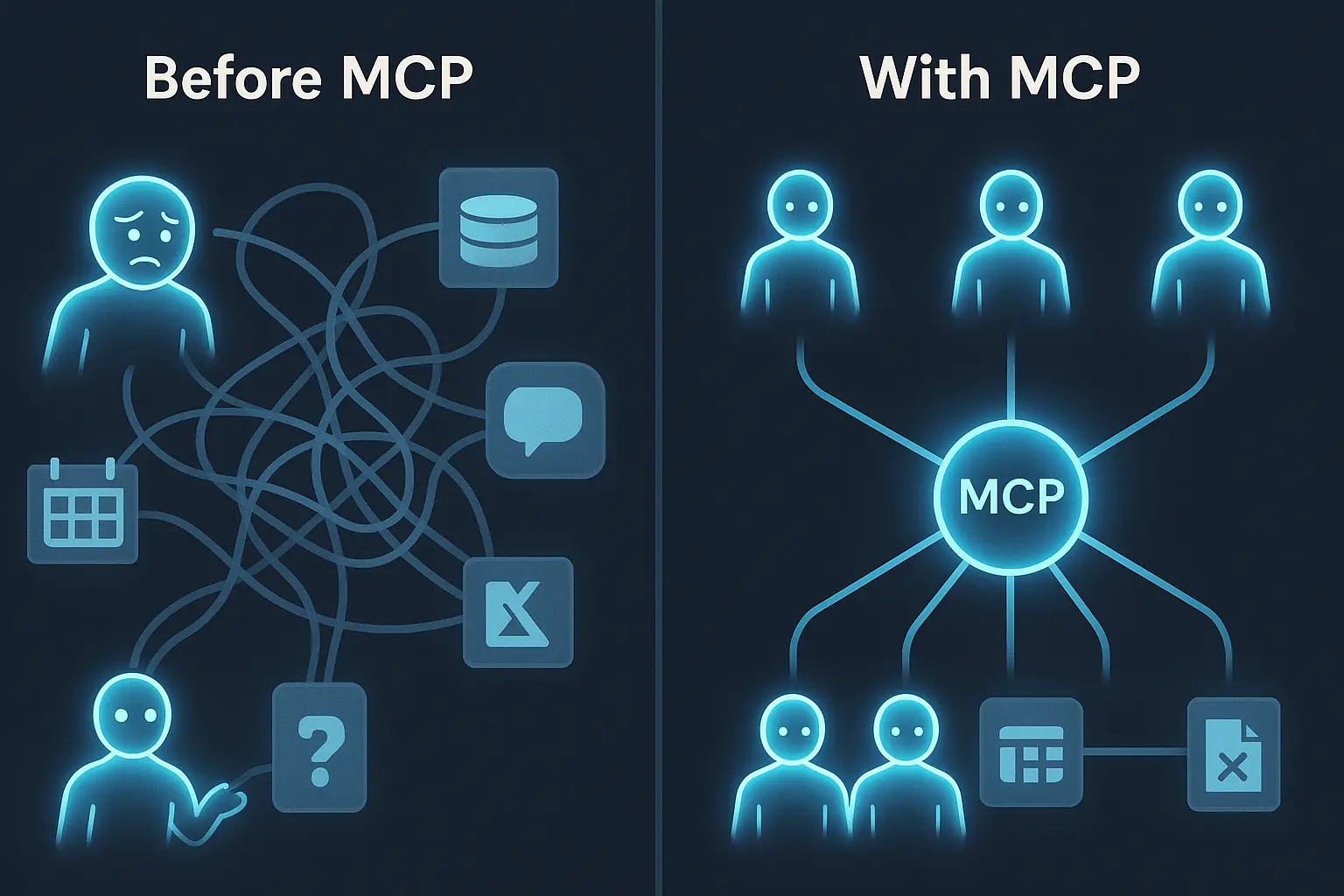

If you're building anything with AI agents or LLMs, you need to understand MCP. This isn't just another technical specification – it's the foundation that will power the next generation of AI applications. Without a standardized way for AI systems to communicate with services, we'll end up with endless custom integrations and fragmented ecosystems that slow down innovation.

MCP solves this by creating a common language between AI agents and the tools they use. With a shared protocol, services become interoperable: your AI agent can work with any MCP-compatible service, which opens up new workflows and capabilities. The developer who understands MCP today will be building the AI applications everyone uses tomorrow.

And if you're not a developer but simply someone who uses AI tools? MCP is still worth your attention. All the hype around MCP exists because it will fundamentally change what your AI assistants can do for you.

Imagine having an AI that doesn't just answer questions but can actually take actions on your behalf across all your favorite apps and services. Your AI could book appointments, analyze spreadsheets, edit documents, and coordinate between different services – all without you having to switch between applications or manually copy-paste information.

MCP is what will make your AI tools feel less like fancy chatbots and more like true personal assistants that understand the context of your work and can maintain that context across different tasks and services.

I've been thinking a lot about MCP lately. It feels like people are treating it as some magical buzzword – throwing it around without stopping to think about what it actually is. So let's break down what MCP really is, how it compares to alternatives, and why I believe it's the right protocol for the AI age.

What is MCP at its core?

MCP (Model Context Protocol) is exactly what the name suggests – a protocol. It's an API specification that defines how applications should communicate, specifically designed to work well with AI models and LLMs.

At its core, MCP is an RPC (Remote Procedure Call) style API, with messages that follow JSON-RPC 2.0. Like any API, there's a server and a client, with the client sending commands to the server. This is fundamentally different from REST APIs, which are built around resources, with CRUD operations mapped to HTTP verbs (GET, POST, PUT, DELETE, etc.).
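
To make the RPC-versus-REST distinction concrete, here is a minimal sketch of what an RPC-style message looks like. MCP messages follow the JSON-RPC 2.0 format; the `get_weather` tool, its arguments, and the REST path are hypothetical and exist only for illustration.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each request needs its own id so replies can be matched to it


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# RPC style: name a procedure and pass it arguments.
rpc_call = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},  # hypothetical tool
)

# REST style, for contrast: the HTTP verb and resource path carry the meaning.
rest_call = {"verb": "GET", "path": "/weather/Berlin"}  # hypothetical endpoint

print(json.dumps(rpc_call, indent=2))
```

In the RPC form, everything the server needs is inside the message body, which is why the same message can travel over very different transports.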

The technical foundation of MCP

Let's get technical for a minute. When defining an API specification, you need to determine:

- Data format: What's the expected encoding of data?

- Transport mechanism: How is data transmitted between client and server?

- Communication pattern: What type of data is sent, and how?

For MCP, these look like:

1. Data Format: JSON

MCP uses JSON for data encoding. This makes perfect sense for two reasons:

- JSON is a well-understood, self-describing format

- LLMs are exceptionally good at generating and parsing JSON because their training data contains so many examples of it

The self-describing nature of JSON is particularly valuable here: the label and the data sit right next to each other, creating what we call "data locality." Compare this to something like Protocol Buffers (used in gRPC), where the encoded message isn't self-descriptive and a separate schema is needed to interpret it.
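
A small sketch of what "data locality" means in practice. The positional tuple below is only a loose stand-in for a schema-dependent encoding, not actual Protocol Buffers wire format, and the field names are made up for illustration.

```python
import json

# Self-describing: every value travels next to its label.
as_json = json.dumps({"city": "Berlin", "temperature_c": 21.5, "raining": False})

# Schema-dependent: only the values travel; the labels live somewhere else.
positional = ("Berlin", 21.5, False)
schema = ("city", "temperature_c", "raining")  # must be known out of band

decoded_json = json.loads(as_json)                  # readable on its own
decoded_positional = dict(zip(schema, positional))  # needs the schema to decode

print(as_json)
print(decoded_json == decoded_positional)
```

Both forms carry the same information, but only the JSON one can be understood by a reader (or a model) that has never seen the schema.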

2. Transport: From Local to HTTP

MCP was originally a local protocol designed to integrate with the Claude Desktop application. Initially, it used STDIN and STDOUT: you'd send JSON to a process on its input stream, and the process would write its responses to STDOUT.
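
The STDIN/STDOUT flow can be sketched as a loop that reads one JSON message per line and writes one JSON reply per line. This is a simplified illustration rather than a complete MCP server: it answers only a `ping` request, and the in-memory streams stand in for a real process pipe.

```python
import io
import json
import sys
from typing import TextIO


def handle(request: dict) -> dict:
    """Answer one JSON-RPC request; only 'ping' is implemented in this sketch."""
    if request.get("method") == "ping":
        return {"jsonrpc": "2.0", "id": request["id"], "result": {}}
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "error": {"code": -32601, "message": "Method not found"},
    }


def serve(stdin: TextIO = sys.stdin, stdout: TextIO = sys.stdout) -> None:
    """Read newline-delimited JSON requests and write newline-delimited replies."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        request = json.loads(line)
        if "id" not in request:  # a notification: JSON-RPC expects no reply
            continue
        stdout.write(json.dumps(handle(request)) + "\n")
        stdout.flush()  # the peer is waiting on the pipe, so do not buffer


# Demonstration with in-memory streams instead of a real child process.
fake_in = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
fake_out = io.StringIO()
serve(fake_in, fake_out)
print(fake_out.getvalue())
```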

But the specification has evolved. It now also works over HTTP, using server-sent events so the server can stream responses and notifications back to the client. This evolution makes sense because running everything locally limits what you can do. Remote MCP servers, and longer agentic workflows that run in the cloud, become possible with this HTTP-based approach.
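
To show what a server-sent event stream looks like on the wire, here is a minimal parser sketch. It handles only the `event:` and `data:` fields and comment lines, it is not a full SSE client, and the sample stream is invented for illustration.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per server-sent event from an iterable of text lines."""
    event_type, data_lines = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":  # a blank line terminates the current event
            if data_lines:
                yield {"event": event_type, "data": "\n".join(data_lines)}
            event_type, data_lines = "message", []
        elif line.startswith(":"):  # comment line, often used as keep-alive
            continue
        elif line.startswith("event:"):
            event_type = line[len("event:"):].strip()
        elif line.startswith("data:"):
            value = line[len("data:"):]
            if value.startswith(" "):  # one optional space follows the colon
                value = value[1:]
            data_lines.append(value)


# Invented sample: one JSON-RPC response delivered as a single event.
stream = [
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"ok": true}}\n',
    "\n",
]
events = list(parse_sse(stream))
payload = json.loads(events[0]["data"])
print(payload)
```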

3. Communication: Self-describing and Stateful

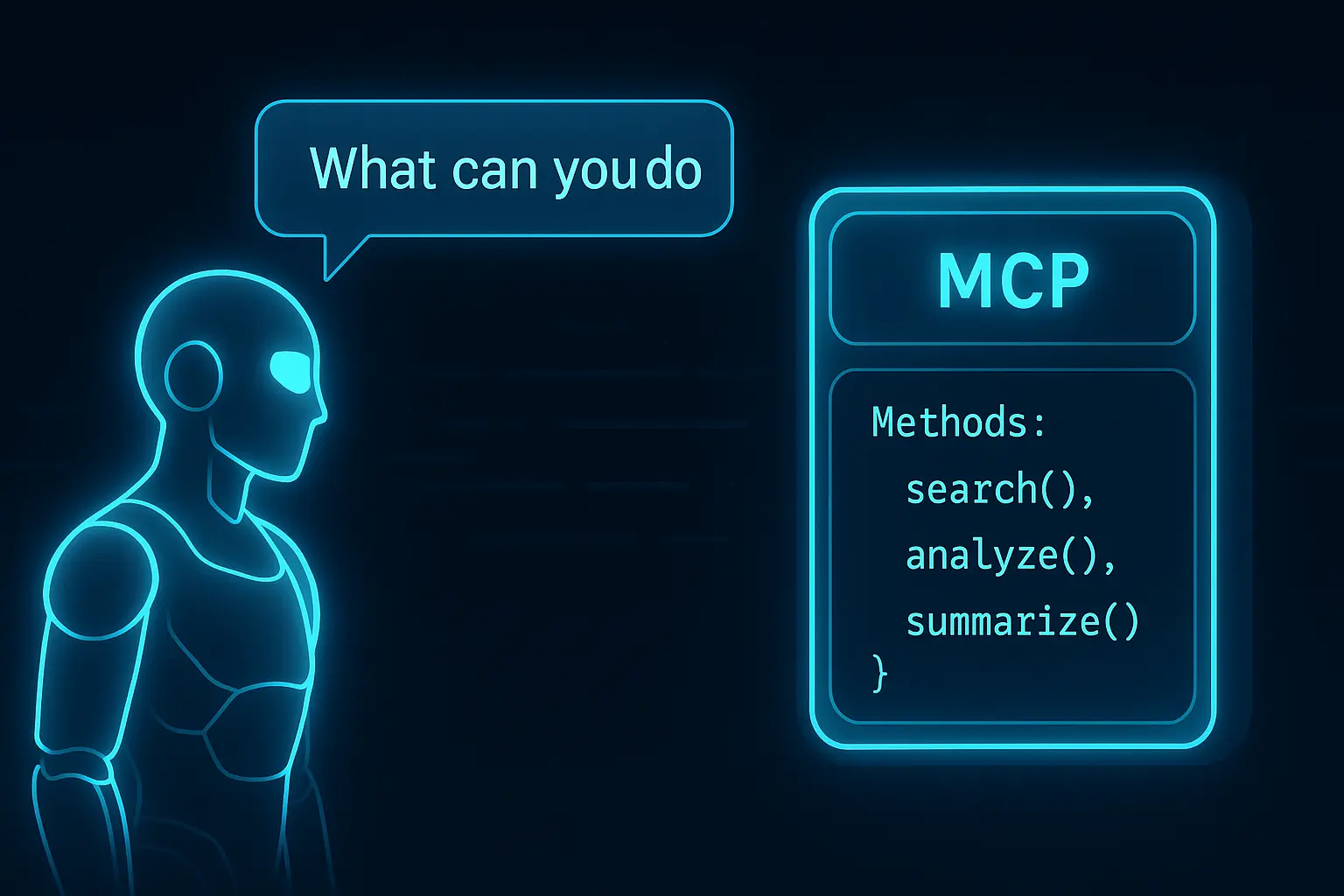

One critical aspect that makes MCP powerful is that the API itself is self-describing. The agent can effectively send a "describe yourself" message to the MCP server (in practice, a listing request such as tools/list), and the server responds with a description of its available methods and capabilities. This creates a beautiful interaction between the LLM agent and the MCP server: the agent asks "what can you do?" and the server responds "here's what I can do," allowing the agent to use the available functionality.
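
The "what can you do?" exchange can be sketched as a small tool registry that answers `tools/list` and `tools/call`. The `add` tool and the `tool` decorator are hypothetical helpers written for this example, not part of any MCP library, and error handling is reduced to a single case.

```python
from typing import Callable

TOOLS: dict = {}  # name -> description, input schema, and implementation


def tool(name: str, description: str, input_schema: dict) -> Callable:
    """Register a function so it shows up in the server's self-description."""
    def register(fn: Callable) -> Callable:
        TOOLS[name] = {"description": description, "inputSchema": input_schema, "fn": fn}
        return fn
    return register


@tool(
    "add",  # hypothetical example tool
    "Add two integers.",
    {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
)
def add(a: int, b: int) -> int:
    return a + b


def handle(request: dict) -> dict:
    """Answer discovery and invocation requests."""
    method = request["method"]
    if method == "tools/list":
        result = {
            "tools": [
                {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                for n, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request["params"]
        value = TOOLS[params["name"]]["fn"](**params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
})
print(listing["result"]["tools"][0]["name"], call["result"]["content"][0]["text"])
```

The agent never needs separate documentation for `add`: the name, description, and input schema all arrive in the listing response.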

Perhaps the most crucial aspect of MCP is that the communication is stateful. When an MCP client initializes a connection, it starts a session, allowing for state on both the server and agent sides. This means the MCP server can maintain context and the MCP client can subscribe to changes – for example, if certain resources change, the server can publish those changes to the client.
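
Here is a minimal sketch of what session state and change subscriptions might look like on the server side. The `Session` and `Server` classes and the `outbox` queue are assumptions made for illustration; a real server would push the queued notifications over its transport.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Session:
    session_id: str
    subscriptions: set = field(default_factory=set)  # resource URIs being watched
    outbox: list = field(default_factory=list)       # notifications waiting to be sent


class Server:
    def __init__(self) -> None:
        self.sessions: dict = {}

    def initialize(self) -> str:
        """Start a session and return its id; state now lives on the server."""
        session_id = uuid.uuid4().hex
        self.sessions[session_id] = Session(session_id)
        return session_id

    def subscribe(self, session_id: str, uri: str) -> None:
        self.sessions[session_id].subscriptions.add(uri)

    def resource_changed(self, uri: str) -> None:
        """Queue an update notification for every session watching this URI."""
        for session in self.sessions.values():
            if uri in session.subscriptions:
                session.outbox.append({
                    "jsonrpc": "2.0",
                    "method": "notifications/resources/updated",
                    "params": {"uri": uri},
                })


server = Server()
watcher = server.initialize()
bystander = server.initialize()
server.subscribe(watcher, "file:///notes.txt")  # hypothetical resource URI
server.resource_changed("file:///notes.txt")
print(len(server.sessions[watcher].outbox), len(server.sessions[bystander].outbox))
```

Only the subscribed session receives the notification, which is exactly the kind of per-client memory a stateless service would not have.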

Why stateful MCP servers are hard to build

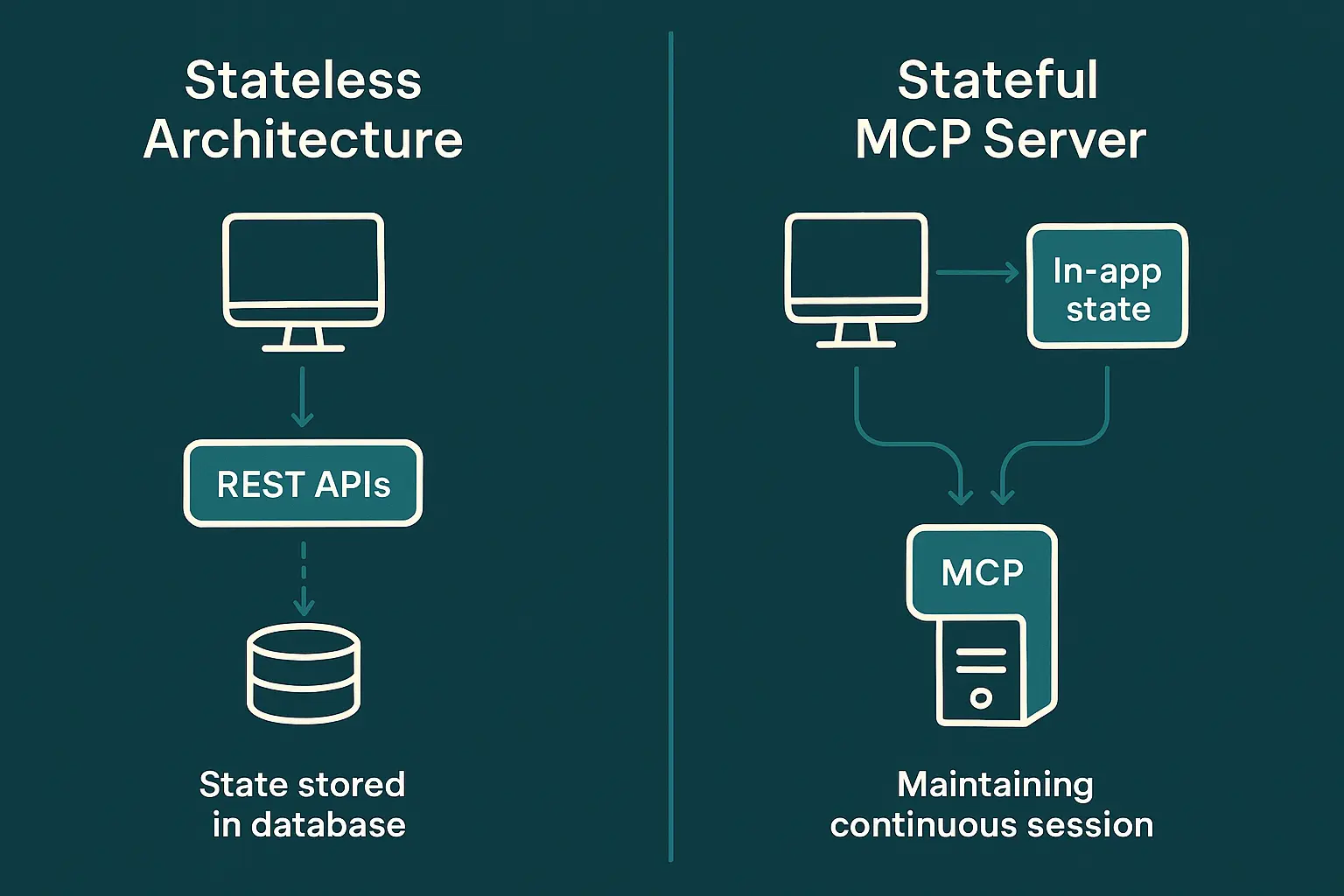

The stateful nature of MCP creates a significant challenge for modern developers. We've spent years trying to build stateless services! The industry moved toward stateless application layers to make scaling easier – state lives in the database and on the client side, not in our services.

This mindset shift is perhaps the biggest hurdle for many developers approaching MCP for the first time. Most of us have been trained to build services that scale horizontally with no state on the application server. We've designed our systems so that any request can be handled by any server instance – that's how we achieve high availability and scalability in cloud environments.

But MCP turns this model on its head. When an MCP client connects to a server, a session begins, and state accumulates. If that client disconnects and reconnects, we need mechanisms to route them back to their previous state. This fundamentally changes how we design our infrastructure.
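
One possible routing mechanism is session affinity: derive the target instance from the session id so that a reconnecting client lands on the same server. The sketch below uses plain hash-modulo routing, and the instance names are hypothetical.

```python
import hashlib

INSTANCES = ["mcp-0", "mcp-1", "mcp-2"]  # hypothetical server instance names


def route(session_id: str, instances: list = INSTANCES) -> str:
    """Pick an instance deterministically from the session id."""
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(instances)
    return instances[index]


first = route("session-abc")
after_reconnect = route("session-abc")
print(first == after_reconnect)
```

The same session id always maps to the same instance, but only while the instance list stays unchanged. Adding or removing an instance remaps many sessions, which is one reason this approach alone is usually not enough.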

Think about what happens when you deploy a new version of your stateless REST API – typically, you can just swap out instances without worrying about ongoing connections. With MCP servers, you need to handle session migration or graceful termination. You need to think about how state is persisted and restored.

This is why building good remote MCP servers is difficult for many developers today. When a client disconnects and reconnects to a session, we need to route the call to the correct place so it can return to its previous state and continue operating. This is fundamentally different from the stateless architectures many of us have been building on AWS Lambda, Cloudflare Workers, or Kubernetes.

Imagine trying to build an MCP server in AWS Lambda, where the function instance that processed the initial request might be completely gone by the time the next request comes in. Or in a Kubernetes cluster where pods are constantly being rescheduled. Without careful design for state management and session affinity, these environments make MCP implementation challenging.
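
One way to cope with disappearing instances is to keep session state outside the process, so that any worker can pick a session up. The sketch below uses an in-memory dictionary as a stand-in for an external store such as a database or cache; the `SessionStore` and `Worker` classes are hypothetical.

```python
import json
from typing import Optional


class SessionStore:
    """Stand-in for an external store shared by all workers."""

    def __init__(self) -> None:
        self._blobs: dict = {}

    def save(self, session_id: str, state: dict) -> None:
        self._blobs[session_id] = json.dumps(state)  # serialize as a real store would

    def load(self, session_id: str) -> Optional[dict]:
        blob = self._blobs.get(session_id)
        return json.loads(blob) if blob is not None else None


class Worker:
    """A short-lived instance that keeps no session state of its own."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def handle(self, session_id: str, message: str) -> dict:
        state = self.store.load(session_id) or {"history": []}
        state["history"].append(message)
        state["last_worker"] = self.name
        self.store.save(session_id, state)
        return state


store = SessionStore()
Worker("instance-a", store).handle("s1", "open report")
# instance-a is gone by now; a different instance continues the same session.
state = Worker("instance-b", store).handle("s1", "summarize it")
print(state["history"], state["last_worker"])
```

The trade-off is an extra read and write per request, in exchange for being able to restart, reschedule, or scale workers without losing sessions.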

This stateful nature also has implications for security and resource management. Since sessions persist, you need more sophisticated approaches to authentication, authorization, and resource allocation. You can't just validate a request in isolation – you need to track a user's entire session and manage their resources over time.

Why MCP makes sense for the AI era

The beauty of MCP lies in how well it's designed for use by LLMs. If you've ever tried building an API integration, you know it can be a nightmare: documentation often sucks and doesn't match the implementation. We've tried addressing this with things like the OpenAPI spec, but those were designed for a time when humans were the primary audience writing integrations.

This is where MCP truly shines. It was built from the ground up with AI consumption in mind. Think about what happens when an LLM tries to use a traditional API:

- It needs to parse human-oriented documentation

- It needs to figure out the correct endpoints and parameters

- It needs to handle authentication flows

- It needs to interpret responses and error codes

- It needs to maintain context across multiple API calls

Each of these steps is a potential failure point. Documentation might be incomplete or outdated. Error responses might be cryptic. The API might have implicit assumptions that aren't clearly stated.

MCP addresses these challenges through its self-describing nature. When an LLM connects to an MCP server, it can simply ask "what can you do?" and get a clear, structured response about available methods and capabilities. This dynamic discovery eliminates the need for perfect documentation – the API describes itself in a format that's optimized for machine consumption.
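
Because the description is machine-readable, a client can check a proposed call against it before sending anything. The sketch below covers only a tiny subset of JSON Schema (required keys and primitive types) and is not a full validator; the `discovered` tool entry is a hypothetical example of what a listing might return.

```python
JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(tool: dict, arguments: dict) -> list:
    """Return a list of problems found when comparing arguments to the schema."""
    schema = tool.get("inputSchema", {})
    properties = schema.get("properties", {})
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        declared = properties.get(key, {}).get("type")
        expected = JSON_TYPES.get(declared)
        if expected is None:
            continue  # undeclared or unsupported type: nothing to check here
        # bool is a subclass of int in Python, so reject it for numeric types
        bool_as_number = isinstance(value, bool) and declared in ("integer", "number")
        if bool_as_number or not isinstance(value, expected):
            problems.append(f"argument {key} should be of type {declared}")
    return problems


# Hypothetical tool entry, shaped like one item of a discovery response.
discovered = {
    "name": "add",
    "description": "Add two integers.",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
}

good = check_arguments(discovered, {"a": 2, "b": 3})
bad = check_arguments(discovered, {"a": "2"})
print(good, bad)
```

An agent can feed the returned problems back to the model and let it correct the call, instead of discovering the mistake from a cryptic server error.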

The JSON format is crucial here too. LLMs have seen countless examples of JSON in their training data, making them exceptionally good at generating and parsing it. The self-describing nature of JSON – where keys and values are paired together – creates a data locality that's perfect for LLMs to process.

Another critical aspect is that MCP was designed for complex, stateful interactions. Traditional REST APIs are great for simple CRUD operations, but they struggle with more complex workflows that require state. MCP's session-based approach allows for much more sophisticated interactions between an AI agent and a service.

Now we're building for machines and autonomous usage. Having a standardized specification like MCP makes it dramatically easier for LLMs to utilize APIs. The self-describing nature of MCP is particularly valuable here – agents can discover capabilities dynamically rather than requiring perfect documentation.

For AI agents to truly become powerful, they need to interact with a wide range of services seamlessly. MCP provides the common language for this interaction, allowing agents to discover and utilize capabilities across different systems without requiring custom integration code for each one.

Real-world example: Why standardization matters

Think about the current state of API integration. Every provider has different approaches:

- Different URL patterns

- Different ways of defining resources or actions

- Different authentication methods

- Different response formats

This inconsistency is manageable (though annoying) for human developers who can adapt and figure things out. But for LLMs, this inconsistency creates unnecessary challenges.

With MCP, we have a standard way for AI agents to:

- Discover what actions are available

- Understand how to use those actions

- Maintain state across interactions

This standardization is what will allow us to create truly powerful AI agent ecosystems.

Beyond the basics

There's much more to MCP than what I've covered here. Future explorations could include:

- Authentication and security in MCP

- Tooling for implementing MCP servers and clients

- Best practices for MCP server implementation

The client side is relatively straightforward – pointing to an endpoint, using libraries, and exposing the appropriate tooling. Most LLM frameworks already have tools like "MCP caller" built in. The server side is where things get interesting and challenging.

The future of AI integration

As we continue building with AI, the need for standardized protocols will only grow. MCP represents a thoughtful approach to this problem – designed with AI in mind from the start, rather than trying to retrofit existing patterns.

What excites me most about MCP is how it enables a whole ecosystem of AI tools to interact seamlessly. When your MCP server can publish changes to connected agents and maintain state across interactions, entirely new patterns of AI-powered applications become possible.

Is implementing MCP servers challenging? Absolutely, especially if you're used to stateless architectures. But the power it unlocks for AI integration makes it worth the effort. As tooling continues to mature, I expect we'll see MCP become increasingly accessible to developers of all types.

For anyone building AI-powered applications today, understanding MCP isn't optional – it's quickly becoming the foundation for how AI agents interact with our systems. Whether you're implementing it today or planning for tomorrow, it's worth understanding what makes this protocol unique and powerful in the AI era.

If you want to chat, hit me up on LinkedIn, either via DMs, or directly on the feed 😊

Further Reading

To deepen your understanding of API protocols and the MCP ecosystem, here are some valuable resources: